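In Caffe, a Net represents a complete CNN model and contains a number of Layer instances. In the Day 5 material we saw classic network structures such as LeNet and AlexNet described in ProtoBuffer text files (prototxt). In Caffe's code, each such structure is realized as a Net object. Net is a considerably more complex design than Blob or Layer, so some patience is needed.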

Basic usage of Net

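A Net is like a blueprint, and its description file is a *.prototxt. We use the CaffeNet model description that ships with Caffe, located at models/bvlc_reference_caffenet/deploy.prototxt. Copy that file into the current working directory.

The test program (net_demo.cpp) is: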

```cpp
#include <iostream>
#include <string>
#include <vector>

#include <caffe/net.hpp>

using namespace caffe;
using namespace std;

int main(void) {
  std::string proto("deploy.prototxt");
  // Build the Net from the prototxt description in the TEST phase
  Net<float> nn(proto, caffe::TEST);
  vector<string> bn = nn.blob_names();   // names of all Blob objects in the Net
  vector<string> ln = nn.layer_names();  // names of all Layer objects in the Net
  for (size_t i = 0; i < bn.size(); i++) {
    cout << "Blob #" << i << " : " << bn[i] << endl;
  }
  for (size_t i = 0; i < ln.size(); i++) {
    cout << "layer #" << i << " : " << ln[i] << endl;
  }
  return 0;
}
```

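Compile it. OpenBLAS must be installed first, and the installation process is not covered here:

```shell
g++ -o netapp net_demo.cpp -I /usr/local/Cellar/caffe/include -D CPU_ONLY -I /usr/local/Cellar/caffe/.build_release/src/ -L /usr/local/Cellar/caffe/build/lib -I /usr/local/Cellar/openblas/0.2.19_1/include -lcaffe -lglog -lboost_system -lprotobuf
```

Note the option -I /usr/local/Cellar/openblas/0.2.19_1/include. It adds the local OpenBLAS header directory to the include path, so the compiler can find the BLAS headers that Caffe includes.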

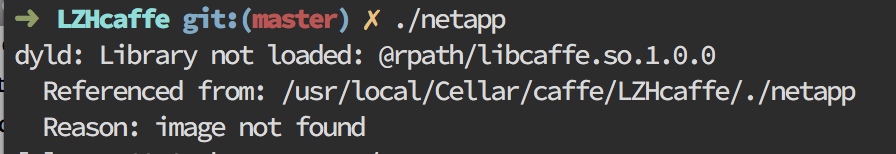

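Run ./netapp:

It reports an error again. This time the Caffe shared library cannot be found when the program is loaded. To fix this, use install_name_tool (a macOS tool) to add the library directory to the executable's runtime search path (rpath):

```shell
install_name_tool -add_rpath '/usr/local/Cellar/caffe/build/lib/' /usr/local/Cellar/caffe/LZHcaffe/./netapp
```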

Once it runs successfully, the output is:

```text
... (earlier log output omitted; we have seen it before)
I0622 11:03:24.688719 3012948928 net.cpp:255] Network initialization done.
Blob #0 : data
Blob #1 : conv1
Blob #2 : pool1
Blob #3 : norm1
Blob #4 : conv2
Blob #5 : pool2
Blob #6 : norm2
Blob #7 : conv3
Blob #8 : conv4
Blob #9 : conv5
Blob #10 : pool5
Blob #11 : fc6
Blob #12 : fc7
Blob #13 : fc8
Blob #14 : prob
layer #0 : data
layer #1 : conv1
layer #2 : relu1
layer #3 : pool1
layer #4 : norm1
layer #5 : conv2
layer #6 : relu2
layer #7 : pool2
layer #8 : norm2
layer #9 : conv3
layer #10 : relu3
layer #11 : conv4
layer #12 : relu4
layer #13 : conv5
layer #14 : relu5
layer #15 : pool5
layer #16 : fc6
layer #17 : relu6
layer #18 : drop6
layer #19 : fc7
layer #20 : relu7
layer #21 : drop7
layer #22 : fc8
layer #23 : prob
```

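This simple example shows that a Net contains both Layer objects and Blob objects. Blob objects store the intermediate input/output results of each Layer. Following the Net description, each Layer performs some computation on its specified input Blobs (convolution, downsampling, fully connected, nonlinear transformation, cost function computation, and so on) and writes the results to its specified output Blobs. The input Blob and the output Blob may be the same one. In CaffeNet, the ReLU and Dropout layers work in place, which is why the output lists 24 layers but only 15 Blobs. All Layers and Blobs are identified by name. Blobs with the same name are the same Blob object, and Layers with the same name are the same Layer object. A Blob and a Layer that share a name, however, have no direct relationship.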
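To make the in-place case concrete, here is a toy model in plain C++ (not Caffe's code) of how Blob names could be registered layer by layer. It assumes, in the spirit of Net::AppendTop, that a top with the same name as the bottom at the same position is an in-place computation and reuses that Blob, while any other top name must be new. The names ToyLayer and CollectBlobNames are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <string>
#include <vector>

// Hypothetical, simplified layer description: a name plus bottom/top blob names.
struct ToyLayer {
  std::string name;
  std::vector<std::string> bottoms;
  std::vector<std::string> tops;
};

// Returns the distinct blob names in creation order. A top whose name matches
// the bottom at the same position is treated as in-place and reuses that blob.
std::vector<std::string> CollectBlobNames(const std::vector<ToyLayer>& layers) {
  std::vector<std::string> blob_names;
  std::map<std::string, int> blob_name_to_idx;
  for (const ToyLayer& layer : layers) {
    for (const std::string& bottom : layer.bottoms) {
      // Every bottom must have been produced by an earlier layer.
      assert(blob_name_to_idx.count(bottom) == 1);
    }
    for (std::size_t t = 0; t < layer.tops.size(); ++t) {
      const std::string& top = layer.tops[t];
      bool in_place = t < layer.bottoms.size() && layer.bottoms[t] == top;
      if (in_place) continue;  // reuse the existing bottom blob
      // Otherwise the name must be new: one producer per blob.
      assert(blob_name_to_idx.count(top) == 0);
      blob_name_to_idx[top] = static_cast<int>(blob_names.size());
      blob_names.push_back(top);
    }
  }
  return blob_names;
}
```

If you feed it the first four CaffeNet layers (data, conv1, relu1, pool1), it yields only three Blob names (data, conv1 and pool1), because relu1 writes back into conv1.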

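We can call has_blob() and has_layer() to check whether the current Net contains a Blob or Layer with a given name. If the result is true, we can then call blob_by_name() or layer_by_name() to get a pointer to that Blob or Layer and work with it. For example, blob_by_name() can extract the output features of a layer, and layer_by_name()->blobs() gives access to a layer's weights.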
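The lookup pattern itself is simple: a name-to-index map sits in front of a vector of shared pointers, which is what the members blob_names_index_ and blobs_ in Net suggest. The standalone sketch below imitates it with hypothetical types (ToyBlob, ToyBlobTable). It returns an empty pointer for unknown names, so checking has_blob() first avoids dereferencing a null pointer.

```cpp
#include <cassert>
#include <map>
#include <memory>
#include <string>
#include <vector>

// Hypothetical stand-in for Blob<float>: just a flat buffer.
struct ToyBlob {
  std::vector<float> data;
};

// Toy lookup table modelled on blobs_ + blob_names_index_ (not Caffe's code).
class ToyBlobTable {
 public:
  void Add(const std::string& name, std::shared_ptr<ToyBlob> blob) {
    assert(!has_blob(name));  // names are unique within a net
    names_index_[name] = static_cast<int>(blobs_.size());
    blobs_.push_back(blob);
  }
  bool has_blob(const std::string& name) const {
    return names_index_.find(name) != names_index_.end();
  }
  // Returns an empty shared_ptr for unknown names.
  std::shared_ptr<ToyBlob> blob_by_name(const std::string& name) const {
    auto it = names_index_.find(name);
    if (it == names_index_.end()) return nullptr;
    return blobs_[it->second];
  }

 private:
  std::vector<std::shared_ptr<ToyBlob>> blobs_;
  std::map<std::string, int> names_index_;
};
```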

Data structures

First, let's look at the NetParameter message defined in caffe.proto (src/caffe/proto/caffe.proto):

```protobuf
message NetParameter {
  optional string name = 1; // consider giving the network a name
  // DEPRECATED. See InputParameter. The input blobs to the network.
  // (Names of the network's input Blobs; there can be several.)
  repeated string input = 3;
  // DEPRECATED. See InputParameter. The shape of the input blobs.
  repeated BlobShape input_shape = 8;

  // 4D input dimensions -- deprecated. Use "input_shape" instead.
  // If specified, for each input blob there should be four
  // values specifying the num, channels, height and width of the input blob.
  // Thus, there should be a total of (4 * #input) numbers.
  // (Old-style dimension information.)
  repeated int32 input_dim = 4;

  // Whether the network will force every layer to carry out backward operation.
  // If set False, then whether to carry out backward is determined
  // automatically according to the net structure and learning rates.
  optional bool force_backward = 5 [default = false];

  // The current "state" of the network, including the phase, level, and stage.
  // Some layers may be included/excluded depending on this state and the states
  // specified in the layers' include and exclude fields.
  optional NetState state = 6;

  // Print debugging information about results while running Net::Forward,
  // Net::Backward, and Net::Update.
  optional bool debug_info = 7 [default = false];

  // The layers that make up the net. Each of their configurations, including
  // connectivity and behavior, is specified as a LayerParameter.
  repeated LayerParameter layer = 100; // ID 100 so layers are printed last.

  // DEPRECATED: use 'layer' instead.
  repeated V1LayerParameter layers = 2;
}
```

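The proto description looks short, but a real network prototxt built on it can be very long. The key is the field `LayerParameter layer`, which is `repeated` and can appear any number of times. The other fields mostly help the network run; we will see more of their details in the code.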
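One of those auxiliary fields is `state`. Its comment says layers may be included or excluded depending on the state and on each layer's include/exclude rules, and Net::FilterNet applies these rules. The sketch below models only the phase, while the real NetStateRule also has level and stage conditions. It is a simplified imitation with hypothetical names (ToyPhase, ToyRule, ToyFilterNet), not Caffe's implementation. A layer without include rules is kept unless an exclude rule matches, and a layer with include rules is kept only if one of them matches.

```cpp
#include <cassert>
#include <string>
#include <vector>

enum class ToyPhase { TRAIN, TEST };

// Simplified NetStateRule: only the phase is modelled here.
struct ToyRule {
  ToyPhase phase;
};

struct ToyLayerParam {
  std::string name;
  std::vector<ToyRule> include;
  std::vector<ToyRule> exclude;
};

bool ToyStateMeetsRule(ToyPhase state, const ToyRule& rule) {
  return state == rule.phase;
}

// Returns the names of the layers that apply to the given phase.
std::vector<std::string> ToyFilterNet(const std::vector<ToyLayerParam>& layers,
                                      ToyPhase state) {
  std::vector<std::string> kept;
  for (const ToyLayerParam& layer : layers) {
    // Either include rules or exclude rules may be given, not both.
    assert(layer.include.empty() || layer.exclude.empty());
    // No include rules: kept by default unless an exclude rule matches.
    bool included = layer.include.empty();
    for (const ToyRule& rule : layer.exclude) {
      if (ToyStateMeetsRule(state, rule)) included = false;
    }
    // Include rules: kept only if at least one of them matches.
    for (const ToyRule& rule : layer.include) {
      if (ToyStateMeetsRule(state, rule)) included = true;
    }
    if (included) kept.push_back(layer.name);
  }
  return kept;
}
```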

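How a Net is formed

If Blobs are Caffe's bricks and Layers are its walls, then a Net is the craftsman's blueprint. It describes where each wall should stand, so that the house is solid and earthquake-resistant. To achieve this, the Net implementation needs a set of data structures that record Layers and Blobs. The table below lists their names, so you won't miss the chance to get acquainted with them.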

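| Member | Meaning |
|---|---|
| layers_ | Every Layer that appears in the Net prototxt |
| layer_names_ | The name of every Layer in the Net prototxt |
| layer_names_index_ | Mapping from each Layer name to its position (index) |
| layer_need_backward_ | Whether each Layer needs a backward pass |
| blobs_ | All Blobs in the Net |
| blob_names_ | The name of each Blob |
| blob_names_index_ | Mapping from each Blob name to its index |
| blob_need_backward_ | Whether each Blob needs a backward pass |
| bottom_vecs_ | A "shadow" of blobs_: the input Blobs of each Layer |
| bottom_id_vecs_ | Companion to bottom_vecs_: locates each input Blob of each Layer in blobs_ |
| bottom_need_backward_ | Companion to bottom_vecs_: whether each input Blob needs a backward pass |
| top_vecs_ | A "shadow" of blobs_: the output Blobs of each Layer |
| top_id_vecs_ | Companion to top_vecs_: locates each output Blob of each Layer in blobs_ |
| blob_loss_weights_ | Weight of each Blob in the loss function; typically 1 for loss layers and 0 for all other layers |
| net_input_blob_indices_ | Indices of the Net's input Blobs in blobs_ |
| net_output_blob_indices_ | Indices of the Net's output Blobs in blobs_ |
| net_input_blobs_ | The Net's input Blobs |
| net_output_blobs_ | The Net's output Blobs |
| params_ | The Net's weight Blobs, which store the network weights |
| param_display_names_ | Names of the weight Blobs |
| learnable_params_ | The trainable weight Blobs |
| params_lr_ | Learning-rate multiplier of each element of learnable_params_ |
| has_params_lr_ | Whether each element of learnable_params_ has a learning-rate multiplier |
| params_weight_decay_ | Weight-decay multiplier of each element of learnable_params_ |
| has_params_decay_ | Whether each element of learnable_params_ has a weight-decay multiplier |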
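The "shadow" relationship in the table can be sketched as follows. blobs_ owns the Blobs through shared pointers, while the per-layer tables keep only indices (bottom_id_vecs_) and raw, non-owning pointers (bottom_vecs_) into it. This is a toy model that assumes that ownership split. The type ToyNetTables and the method RecordBottom are hypothetical and are not Caffe's AppendBottom.

```cpp
#include <cassert>
#include <memory>
#include <vector>

struct ToyBlob {
  std::vector<float> data;
};

// Toy net bookkeeping: blobs_ owns the blobs; the bottom tables only refer to them.
struct ToyNetTables {
  std::vector<std::shared_ptr<ToyBlob>> blobs_;
  std::vector<std::vector<int>> bottom_id_vecs_;
  std::vector<std::vector<ToyBlob*>> bottom_vecs_;

  // Records that layer `layer_id` reads blob `blob_id` as its next bottom.
  void RecordBottom(int layer_id, int blob_id) {
    assert(blob_id >= 0 && blob_id < static_cast<int>(blobs_.size()));
    if (static_cast<int>(bottom_vecs_.size()) <= layer_id) {
      bottom_vecs_.resize(layer_id + 1);
      bottom_id_vecs_.resize(layer_id + 1);
    }
    bottom_id_vecs_[layer_id].push_back(blob_id);
    // Raw, non-owning pointer: the "shadow" of blobs_[blob_id].
    bottom_vecs_[layer_id].push_back(blobs_[blob_id].get());
  }
};
```

Because the shadow holds a pointer rather than a copy, data written into blobs_[i] by one layer is immediately visible to every layer that lists i as a bottom.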

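The table contains two kinds of Blob: weight Blobs (the param-related members) and Layer input/output Blobs (the blob-related members). Both have type Blob, but they play very different roles in the network. Weight Blobs are updated as learning proceeds and belong to the "model". Layer input/output Blobs change only with the network input and belong to the "data". The goal of deep learning is to keep extracting knowledge from "data", store it in the "model", and apply it to future "data".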
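The difference shows up in Net::Update(), which, according to its header comment, updates the weights from the diff values already prepared by the Solver. Caffe's Blob::Update() computes data = data - diff. The sketch below is a simplified plain-SGD stand-in (no momentum or weight decay), and the names ToyBlob, ToyUpdate and ToySgdStep are hypothetical. It touches only the learnable parameters and never the data Blobs.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Toy blob with both a data and a diff field.
struct ToyBlob {
  std::vector<float> data;
  std::vector<float> diff;
};

// Effect of Blob::Update(): data <- data - diff.
void ToyUpdate(ToyBlob* param) {
  assert(param->data.size() == param->diff.size());
  for (std::size_t i = 0; i < param->data.size(); ++i) {
    param->data[i] -= param->diff[i];
  }
}

// Simplified solver step: scale each gradient by the learning rate (the role a
// Solver plays when it prepares diff), then apply it to every learnable param.
void ToySgdStep(const std::vector<ToyBlob*>& learnable_params, float lr) {
  for (ToyBlob* param : learnable_params) {
    for (float& g : param->diff) g *= lr;
    ToyUpdate(param);
  }
}
```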

The Net class is declared in include/caffe/net.hpp. Below is an excerpt, with comments added:

```cpp
// (header guard, #include lines and "namespace caffe {" omitted in this excerpt)
template <typename Dtype>
class Net {
 public:
  // Explicit constructors
  explicit Net(const NetParameter& param);
  explicit Net(const string& param_file, Phase phase,
      const int level = 0, const vector<string>* stages = NULL);
  // Destructor
  virtual ~Net() {}

  /// @brief Initialize a network with a NetParameter.
  void Init(const NetParameter& param);

  // Run forward propagation; the input Blobs must already be filled in
  const vector<Blob<Dtype>*>& Forward(Dtype* loss = NULL);
  /// @brief DEPRECATED; use Forward() instead.
  const vector<Blob<Dtype>*>& ForwardPrefilled(Dtype* loss = NULL) {
    LOG_EVERY_N(WARNING, 1000) << "DEPRECATED: ForwardPrefilled() "
        << "will be removed in a future version. Use Forward().";
    return Forward(loss);
  }

  /**
   * The From and To variants of Forward and Backward operate on the
   * (topological) ordering by which the net is specified. For general DAG
   * networks, note that (1) computing from one layer to another might entail
   * extra computation on unrelated branches, and (2) computation starting in
   * the middle may be incorrect if all of the layers of a fan-in are not
   * included.
   */
  // Variants of forward propagation over a range of layers
  Dtype ForwardFromTo(int start, int end);
  Dtype ForwardFrom(int start);
  Dtype ForwardTo(int end);
  /// @brief DEPRECATED; set input blobs then use Forward() instead.
  const vector<Blob<Dtype>*>& Forward(const vector<Blob<Dtype>* > & bottom,
      Dtype* loss = NULL);

  // Zero the diff of all weight Blobs; call this before backward propagation
  void ClearParamDiffs();

  // Variants of backward propagation. No input/output Blobs need to be passed:
  // the Net already knows how the Blobs are connected, and Backward relies on
  // the data produced by the preceding Forward
  void Backward();
  void BackwardFromTo(int start, int end);
  void BackwardFrom(int start);
  void BackwardTo(int end);

  // Reshape all Layers from bottom to top, computing the Blob sizes each layer
  // needs without running a forward pass
  void Reshape();

  // Forward pass followed by backward pass; reads the already filled input
  // Blobs and returns the loss
  Dtype ForwardBackward() {
    Dtype loss;
    Forward(&loss);
    Backward();
    return loss;
  }

  // Update the network weights from the diff values already prepared (by the Solver)
  void Update();
  /**
   * @brief Shares weight data of owner blobs with shared blobs.
   *
   * Note: this is called by Net::Init, and thus should normally not be
   * called manually.
   */
  void ShareWeights();

  /**
   * @brief For an already initialized net, implicitly copies (i.e., using no
   *        additional memory) the pre-trained layers from another Net.
   */
  void ShareTrainedLayersWith(const Net* other);
  // For an already initialized net, CopyTrainedLayersFrom() copies the already
  // trained layers from another net parameter instance.
  /**
   * @brief For an already initialized net, copies the pre-trained layers from
   *        another Net.
   */
  void CopyTrainedLayersFrom(const NetParameter& param);
  void CopyTrainedLayersFrom(const string trained_filename);
  void CopyTrainedLayersFromBinaryProto(const string trained_filename);
  void CopyTrainedLayersFromHDF5(const string trained_filename);
  /// @brief Writes the net to a proto.
  void ToProto(NetParameter* param, bool write_diff = false) const;
  /// @brief Writes the net to an HDF5 file.
  void ToHDF5(const string& filename, bool write_diff = false) const;

  /// @brief returns the network name.
  inline const string& name() const { return name_; }
  /// @brief returns the layer names
  inline const vector<string>& layer_names() const { return layer_names_; }
  /// @brief returns the blob names
  inline const vector<string>& blob_names() const { return blob_names_; }
  /// @brief returns the blobs
  inline const vector<shared_ptr<Blob<Dtype> > >& blobs() const {
    return blobs_;
  }
  /// @brief returns the layers
  inline const vector<shared_ptr<Layer<Dtype> > >& layers() const {
    return layers_;
  }
  /// @brief returns the phase: TRAIN or TEST
  inline Phase phase() const { return phase_; }
  /**
   * @brief returns the bottom vecs for each layer -- usually you won't
   *        need this unless you do per-layer checks such as gradients.
   */
  inline const vector<vector<Blob<Dtype>*> >& bottom_vecs() const {
    return bottom_vecs_;
  }
  /**
   * @brief returns the top vecs for each layer -- usually you won't
   *        need this unless you do per-layer checks such as gradients.
   */
  inline const vector<vector<Blob<Dtype>*> >& top_vecs() const {
    return top_vecs_;
  }
  /// @brief returns the ids of the top blobs of layer i
  inline const vector<int> & top_ids(int i) const {
    CHECK_GE(i, 0) << "Invalid layer id";
    CHECK_LT(i, top_id_vecs_.size()) << "Invalid layer id";
    return top_id_vecs_[i];
  }
  /// @brief returns the ids of the bottom blobs of layer i
  inline const vector<int> & bottom_ids(int i) const {
    CHECK_GE(i, 0) << "Invalid layer id";
    CHECK_LT(i, bottom_id_vecs_.size()) << "Invalid layer id";
    return bottom_id_vecs_[i];
  }
  inline const vector<vector<bool> >& bottom_need_backward() const {
    return bottom_need_backward_;
  }
  inline const vector<Dtype>& blob_loss_weights() const {
    return blob_loss_weights_;
  }
  // Returns, for each Layer, whether it needs backward computation
  inline const vector<bool>& layer_need_backward() const {
    return layer_need_backward_;
  }
  /// @brief returns the parameters
  inline const vector<shared_ptr<Blob<Dtype> > >& params() const {
    return params_;
  }
  // Returns all learnable weights
  inline const vector<Blob<Dtype>*>& learnable_params() const {
    return learnable_params_;
  }
  /// @brief returns the learnable parameter learning rate multipliers
  inline const vector<float>& params_lr() const { return params_lr_; }
  inline const vector<bool>& has_params_lr() const { return has_params_lr_; }
  /// @brief returns the learnable parameter decay multipliers
  inline const vector<float>& params_weight_decay() const {
    return params_weight_decay_;
  }
  inline const vector<bool>& has_params_decay() const {
    return has_params_decay_;
  }
  // Returns the map from parameter name to its index in params_
  const map<string, int>& param_names_index() const {
    return param_names_index_;
  }
  // Returns the owner of each weight
  inline const vector<int>& param_owners() const { return param_owners_; }
  inline const vector<string>& param_display_names() const {
    return param_display_names_;
  }
  /// @brief Input and output blob numbers
  inline int num_inputs() const { return net_input_blobs_.size(); }
  inline int num_outputs() const { return net_output_blobs_.size(); }
  // Returns the input Blobs
  inline const vector<Blob<Dtype>*>& input_blobs() const {
    return net_input_blobs_;
  }
  // Returns the output Blobs
  inline const vector<Blob<Dtype>*>& output_blobs() const {
    return net_output_blobs_;
  }
  // Returns the indices of the input Blobs
  inline const vector<int>& input_blob_indices() const {
    return net_input_blob_indices_;
  }
  // Returns the indices of the output Blobs
  inline const vector<int>& output_blob_indices() const {
    return net_output_blob_indices_;
  }
  // Checks whether the network contains a Blob with the given name
  bool has_blob(const string& blob_name) const;
  // If it does, returns a pointer to that Blob
  const shared_ptr<Blob<Dtype> > blob_by_name(const string& blob_name) const;
  // Checks whether the network contains a Layer with the given name
  bool has_layer(const string& layer_name) const;
  // If it does, returns a pointer to that Layer
  const shared_ptr<Layer<Dtype> > layer_by_name(const string& layer_name) const;

  // Sets debug_info_
  void set_debug_info(const bool value) { debug_info_ = value; }

  // Helpers for Init.
  /**
   * @brief Remove layers that the user specified should be excluded given the current
   *        phase, level, and stage.
   */
  static void FilterNet(const NetParameter& param,
      NetParameter* param_filtered);
  /// @brief return whether NetState state meets NetStateRule rule
  static bool StateMeetsRule(const NetState& state, const NetStateRule& rule,
      const string& layer_name);

  // Invoked at specific points during an iteration
  class Callback {
   protected:
    virtual void run(int layer) = 0;

    template <typename T>
    friend class Net;
  };
  const vector<Callback*>& before_forward() const { return before_forward_; }
  void add_before_forward(Callback* value) {
    before_forward_.push_back(value);
  }
  const vector<Callback*>& after_forward() const { return after_forward_; }
  void add_after_forward(Callback* value) {
    after_forward_.push_back(value);
  }
  const vector<Callback*>& before_backward() const { return before_backward_; }
  void add_before_backward(Callback* value) {
    before_backward_.push_back(value);
  }
  const vector<Callback*>& after_backward() const { return after_backward_; }
  void add_after_backward(Callback* value) {
    after_backward_.push_back(value);
  }

 protected:
  // Helpers for Init.
  /// @brief Append a new top blob to the net.
  void AppendTop(const NetParameter& param, const int layer_id,
                 const int top_id, set<string>* available_blobs,
                 map<string, int>* blob_name_to_idx);
  /// @brief Append a new bottom blob to the net.
  int AppendBottom(const NetParameter& param, const int layer_id,
                   const int bottom_id, set<string>* available_blobs,
                   map<string, int>* blob_name_to_idx);
  /// @brief Append a new parameter blob to the net.
  void AppendParam(const NetParameter& param, const int layer_id,
                   const int param_id);

  /// @brief Helper for displaying debug info in Forward.
  void ForwardDebugInfo(const int layer_id);
  /// @brief Helper for displaying debug info in Backward.
  void BackwardDebugInfo(const int layer_id);
  /// @brief Helper for displaying debug info in Update.
  void UpdateDebugInfo(const int param_id);

  /// @brief The network name
  string name_;
  /// @brief The phase: TRAIN or TEST
  Phase phase_;
  /// @brief Individual layers in the net
  vector<shared_ptr<Layer<Dtype> > > layers_;
  vector<string> layer_names_;          // layer names
  map<string, int> layer_names_index_;  // layer name -> index
  vector<bool> layer_need_backward_;    // whether each layer needs backprop
  /// @brief the blobs storing intermediate results between the layer.
  vector<shared_ptr<Blob<Dtype> > > blobs_;  // the "pipes" carrying data between layers
  vector<string> blob_names_;           // Blob names
  map<string, int> blob_names_index_;   // Blob name -> index
  vector<bool> blob_need_backward_;     // whether each Blob needs backprop
  /// bottom_vecs stores the vectors containing the input for each layer.
  /// They don't actually host the blobs (blobs_ does), so we simply store
  /// pointers.
  vector<vector<Blob<Dtype>*> > bottom_vecs_;
  vector<vector<int> > bottom_id_vecs_;
  vector<vector<bool> > bottom_need_backward_;
  /// top_vecs stores the vectors containing the output for each layer
  vector<vector<Blob<Dtype>*> > top_vecs_;
  vector<vector<int> > top_id_vecs_;
  /// Vector of weight in the loss (or objective) function of each net blob,
  /// indexed by blob_id.
  vector<Dtype> blob_loss_weights_;
  vector<vector<int> > param_id_vecs_;
  vector<int> param_owners_;
  vector<string> param_display_names_;
  vector<pair<int, int> > param_layer_indices_;
  map<string, int> param_names_index_;
  /// blob indices for the input and the output of the net
  vector<int> net_input_blob_indices_;
  vector<int> net_output_blob_indices_;
  vector<Blob<Dtype>*> net_input_blobs_;
  vector<Blob<Dtype>*> net_output_blobs_;
  /// The parameters in the network.
  vector<shared_ptr<Blob<Dtype> > > params_;
  // Learnable network weights
  vector<Blob<Dtype>*> learnable_params_;
  /**
   * The mapping from params_ -> learnable_params_: we have
   * learnable_param_ids_.size() == params_.size(),
   * and learnable_params_[learnable_param_ids_[i]] == params_[i].get()
   * if and only if params_[i] is an "owner"; otherwise, params_[i] is a sharer
   * and learnable_params_[learnable_param_ids_[i]] gives its owner.
   */
  vector<int> learnable_param_ids_;
  /// the learning rate multipliers for learnable_params_
  vector<float> params_lr_;
  vector<bool> has_params_lr_;
  /// the weight decay multipliers for learnable_params_
  vector<float> params_weight_decay_;
  vector<bool> has_params_decay_;
  /// The bytes of memory used by this net
  size_t memory_used_;
  /// Whether to compute and display debug info for the net.
  bool debug_info_;
  // Callbacks
  vector<Callback*> before_forward_;
  vector<Callback*> after_forward_;
  vector<Callback*> before_backward_;
  vector<Callback*> after_backward_;

  // Disable the copy constructor and the assignment operator
  DISABLE_COPY_AND_ASSIGN(Net);
};

} // namespace caffe

#endif // CAFFE_NET_HPP_
```
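The comment above learnable_param_ids_ describes an owner/sharer mapping that is easy to misread, so here is a toy construction of it. It assumes that owners[i] == -1 marks params[i] as an owner and that otherwise owners[i] is the index (in params) of the Blob it shares weights with, which is how param_owners_ appears to be used. ToyBlob, ToyParamTables and BuildLearnable are hypothetical names, and this is not Caffe's AppendParam.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <vector>

struct ToyBlob {
  std::vector<float> data;
};

struct ToyParamTables {
  std::vector<ToyBlob*> learnable_params;
  std::vector<int> learnable_param_ids;  // one entry per element of params
};

// Builds learnable_params / learnable_param_ids from params and their owners.
ToyParamTables BuildLearnable(const std::vector<std::shared_ptr<ToyBlob>>& params,
                              const std::vector<int>& owners) {
  assert(params.size() == owners.size());
  ToyParamTables t;
  for (std::size_t i = 0; i < params.size(); ++i) {
    if (owners[i] < 0) {
      // Owner: gets its own slot in learnable_params.
      t.learnable_param_ids.push_back(static_cast<int>(t.learnable_params.size()));
      t.learnable_params.push_back(params[i].get());
    } else {
      // Sharer: reuses its owner's slot (the owner must appear earlier).
      assert(owners[i] < static_cast<int>(i));
      t.learnable_param_ids.push_back(t.learnable_param_ids[owners[i]]);
    }
  }
  return t;
}
```

With three params where the third shares the first one's weights, learnable_params has two entries, and looking up index 2 through learnable_param_ids leads back to the first Blob, the owner.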

That concludes our study of the Net header file. Next we will study the corresponding implementation code (the .cpp file).

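Mechanism and policy

First of all, Net/Layer/Blob in Caffe follow a layered design pattern.

Two concepts are pervasive in everyday life yet very easy to overlook: mechanism and policy.

Generally speaking, for any given thing, the mechanism answers the question "what can it do?", while the policy answers "how is it used?".

Back in the Caffe source, Blob provides the mechanism of a data container. Layer applies different policies to that container to implement a variety of computations, and at the same time provides the mechanisms for the basic deep learning algorithms (convolution, downsampling, loss computation, and so on). Net then combines these Layer mechanisms into a complete deep learning model and offers richer learning policies. We will see later that Net is itself also a mechanism.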
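The layering can be illustrated with a deliberately tiny model. It is not Caffe's class design, and ToyBlob, ToyLayer, ToyScale, ToyReLU and ToyNet are hypothetical. ToyBlob is only a container (mechanism). Each ToyLayer subclass is a policy for using that container and also a mechanism for one kind of computation. ToyNet composes layers into a model, here a simple chain rather than a general DAG.

```cpp
#include <cassert>
#include <memory>
#include <utility>
#include <vector>

// Mechanism: a plain data container.
struct ToyBlob {
  std::vector<float> data;
};

// A layer uses the container (policy) and provides one computation (mechanism).
class ToyLayer {
 public:
  virtual ~ToyLayer() = default;
  virtual void Forward(const ToyBlob& bottom, ToyBlob* top) const = 0;
};

class ToyScale : public ToyLayer {
 public:
  explicit ToyScale(float s) : s_(s) {}
  void Forward(const ToyBlob& bottom, ToyBlob* top) const override {
    std::vector<float> out;
    for (float x : bottom.data) out.push_back(s_ * x);
    top->data = out;  // safe even if top and bottom are the same blob
  }

 private:
  float s_;
};

class ToyReLU : public ToyLayer {
 public:
  void Forward(const ToyBlob& bottom, ToyBlob* top) const override {
    std::vector<float> out;
    for (float x : bottom.data) out.push_back(x > 0.0f ? x : 0.0f);
    top->data = out;
  }
};

// The net composes layer mechanisms into a model (a simple chain here).
class ToyNet {
 public:
  void Add(std::unique_ptr<ToyLayer> layer) {
    assert(layer != nullptr);
    layers_.push_back(std::move(layer));
  }
  ToyBlob Forward(const ToyBlob& input) const {
    ToyBlob current = input;
    for (const auto& layer : layers_) {
      ToyBlob next;
      layer->Forward(current, &next);
      current = next;
    }
    return current;
  }

 private:
  std::vector<std::unique_ptr<ToyLayer>> layers_;
};
```

Swapping the layer list changes the model (the policy) without touching ToyBlob or the individual layers (the mechanisms).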

When reading source code, always keep in mind which one you are looking for: high-level policy or low-level mechanism.